Why we’re going all-in with Leap Motion’s Interaction Engine

We decided a long time ago to adopt a UI paradigm of direct manipulation with hands for almost every UI in Holos. There were several reasons for this, mainly richness of interaction and ease of learning for users. Hands are natural: just reach out and touch the world. It’s also fairly future-proof, since most people have hands and pretty much always will. This gave us some challenges though, pretty much all of them coming from the fact that we want to be multi-platform. We’d need a single system that provides hand representations on any VR platform, whether it has real finger tracking or not.

To solve this, we started building our own common hand model. The idea was to make a model (meaning code and mesh/skeleton) of a hand that we could design all interactions around. Adding support for new hardware would then be as simple as writing a new input module to funnel the inputs into the hand model. Nothing would need to change on the widget/interaction side. It would be a huge undertaking but ultimately worth it to bring rich hand interactions to every platform we could. The old version of Holos was a laser-pointer UI, so basically none of this was implemented.
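To make the shape of that idea concrete, here is a minimal sketch in Python. It is not the actual Holos code: every name in it (`HandPose`, `HandInputModule`, `ControllerInputModule`, `FingerTrackingInputModule`, `is_fist`) is hypothetical, and the trigger-to-index and grip-to-other-fingers mapping is just an assumed example of how a controller could be funneled into a hand model.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


@dataclass
class HandPose:
    """Platform-neutral hand state. Curl runs from 0.0 (extended) to 1.0 (curled)."""
    curls: dict = field(default_factory=lambda: {f: 0.0 for f in FINGERS})


class HandInputModule(ABC):
    """One subclass per piece of hardware; widgets only ever see HandPose."""

    @abstractmethod
    def read_pose(self) -> HandPose:
        ...


class ControllerInputModule(HandInputModule):
    """Hypothetical mapping: trigger curls the index finger, grip curls the rest."""

    def __init__(self, trigger: float = 0.0, grip: float = 0.0):
        self.trigger = trigger
        self.grip = grip

    def read_pose(self) -> HandPose:
        pose = HandPose()
        pose.curls["index"] = self.trigger
        for finger in ("middle", "ring", "pinky"):
            pose.curls[finger] = self.grip
        return pose


class FingerTrackingInputModule(HandInputModule):
    """Passes per-finger curl values from a tracker straight through."""

    def __init__(self, tracked_curls: dict):
        self.tracked_curls = dict(tracked_curls)

    def read_pose(self) -> HandPose:
        pose = HandPose()
        pose.curls.update(self.tracked_curls)
        return pose


def is_fist(pose: HandPose, threshold: float = 0.8) -> bool:
    """Interaction-side code: depends only on HandPose, never on the hardware."""
    return all(pose.curls[f] >= threshold for f in FINGERS if f != "thumb")
```

With this split, `is_fist(ControllerInputModule(trigger=1.0, grip=1.0).read_pose())` and `is_fist(FingerTrackingInputModule({...}).read_pose())` go through exactly the same interaction code, which is the property the common hand model was meant to give us.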

The road to Interaction Engine started early. I originally based our common hand model on the Leap rigged hand that everyone is so familiar with, the grey low-poly one. We wanted finger-tracked UIs to be a first-class citizen because of their increased presence and degrees of tracking freedom, so starting from there and adding backwards compatibility for tracked controllers made the most sense. This did mean, though, that we needed a way to drive hand animations from controller input, which I wound up doing with motion capture and a complex set of blend shapes. I used this model to build things like buttons and wearable virtual interfaces, as well as animated colliders and physics and grabbing ability. It was working well enough. You could push buttons and pick up things, but it felt like the entire package was suffering from the ‘jack of all trades, master of none’ effect.

Writing things like gesture detectors and making passable interactions between tracked fingers and objects was a challenge. Early attempts would work well either with finger tracking or with hand controllers, but rarely both. I wound up adding a boolean flag in the code that I could check to see whether I was in finger-tracking mode or in backwards-compatibility mode for hand-held controllers. Using this, I began employing the Detector system from the Leap Core assets to do things like detect pinches, detect finger extension, and even dictate tutorial control flow. I had to make my own custom detector ‘wrappers’, which would use the high-quality Leap components when finger tracking was available, or my own custom hackjob scripts in backwards-compatibility mode. For reference, this was long before the release of the latest Interaction Engine with support for Vive & Touch controllers.
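The wrapper pattern looked roughly like the sketch below, again in Python and again illustrative rather than the real code. `PinchDetectorWrapper`, its thresholds, and the idea of using a trigger value as the controller-side stand-in for a pinch are all assumptions made up for this example; it does not call any actual Leap API.

```python
from typing import Optional


class PinchDetectorWrapper:
    """Single entry point for 'is the user pinching?' across both input modes."""

    # Hypothetical thresholds, chosen only for illustration.
    PINCH_DISTANCE_M = 0.03   # thumb-to-index distance that counts as a pinch
    TRIGGER_THRESHOLD = 0.5   # trigger pull that counts as a pinch

    def __init__(self, finger_tracking_available: bool):
        # The boolean that every wrapper checks before choosing a code path.
        self.finger_tracking_available = finger_tracking_available

    def is_pinching(
        self,
        thumb_index_distance_m: Optional[float] = None,
        trigger_value: float = 0.0,
    ) -> bool:
        if self.finger_tracking_available and thumb_index_distance_m is not None:
            # Finger-tracking path: rely on real fingertip data.
            return thumb_index_distance_m < self.PINCH_DISTANCE_M
        # Backwards-compatibility path: approximate a pinch from the trigger.
        return trigger_value > self.TRIGGER_THRESHOLD
```

Callers such as a tutorial step only ask `detector.is_pinching(...)` and never need to know which mode is active. The cost is that every detector needs two implementations, which is where a lot of the complexity came from.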

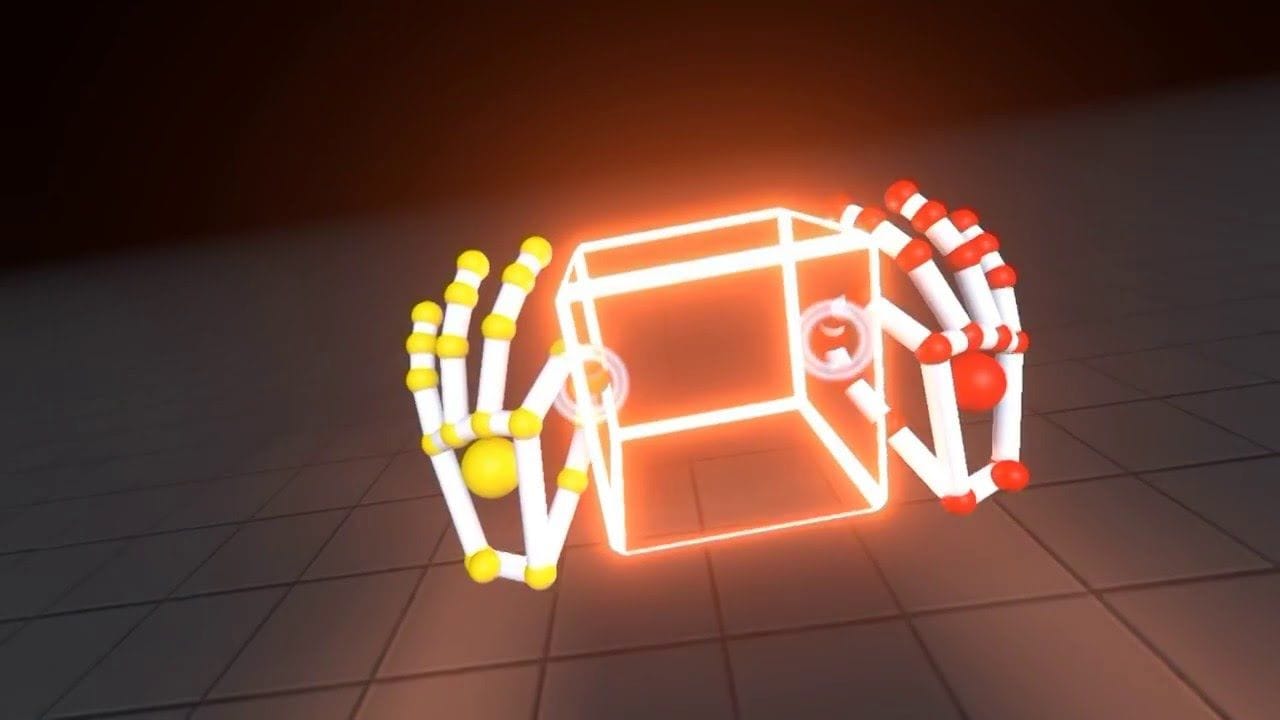

At this point, I still wasn’t using Interaction Engine. I was initially happy with the performance of my GrabbableItem wrapper class, which used a single sphere collider for grab detection and capsule colliders for fingers. Eventually, though, the limitations started becoming obvious. It worked well for hand-held controllers, but for finger tracking it paled in comparison to the Interaction Engine. With no soft-contact system, just poking or pushing an object could send it flying out of control. I’d pondered making my own soft-contact system, having encountered the concept in some old video games I’d played long ago. But once again, it made sense to simply import the Interaction Engine and use its strengths for picking up and poking things when available, instead of rolling my own for both cases. The codebase ballooned in complexity, but the interactions were as awesome as we could make them, which was, of course, the primary goal.

Then, an Interaction Engine update dropped. It offered some insane advantages: lots of general refactoring to make systems more logically coherent and to provide better access to events like hover and contact. It also came with new widgets, a graphic renderer, and, most importantly for this discussion, backwards compatibility for hand controllers. I dug in when the announcement was made and I must say, I was very impressed with it. At the time, though, it seemed like too much of a task to go all-in on it. I’d have to cut out a massive amount of code and test for all the inevitable side-effect bugs that could crop up. Not to mention that their backwards-compatibility support didn’t have one really nice thing our in-house system has: a fully animated hand model that is driven by the controller inputs. I was torn. I really wanted to just make the switch, but with our current resources it seemed like too much.

But as of today, that’s changing. As I work on some of the new features, I really need two-handed interactions (our system doesn’t support that yet), and the soft-contact system is such a huge benefit for manipulating objects with your hands that I struggle to summon the words to do it justice. The new hover and grasp APIs give so much more information than ours, and in general the level of quality is a huge step above anything I could make myself in a reasonable timeframe. Switching to the Graphic Renderer will make our draw calls reasonable and free up those sweet, sweet processor cycles for things like world customization. There are some rendering-related performance pitfalls inherent to world customization: static worlds are easier to optimize for prettiness, but when you let users make their own world you need to gently guide them away from doing things like putting dynamic lights everywhere. Also, be militant about batching.

So I’m deprecating our in-house interaction system and replacing all of it with Interaction Engine. It will be a bunch of work up front, but the work it will save in the long run is absolutely massive, and it will raise the quality of Holos for users of skeletal hand tracking and hand-held controllers alike. There’s really no reason not to.

Holos is still in closed beta, since it’s still heavily in development. Visit holos.io to apply for the beta if you’re so inclined.